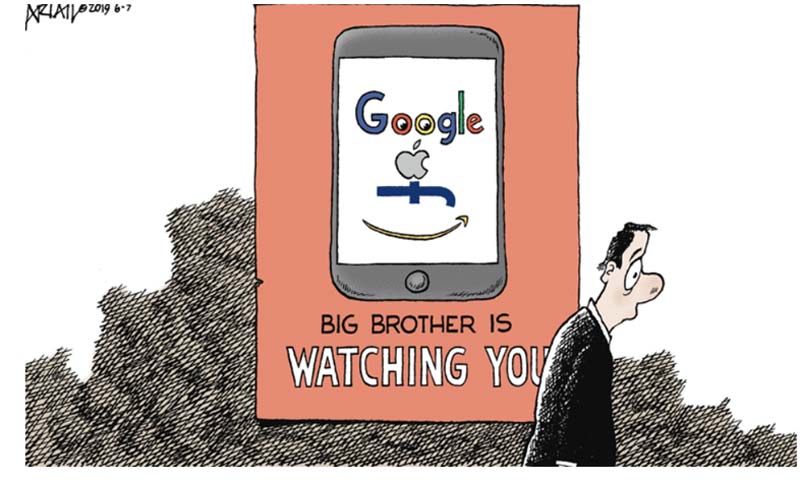

Google on Tuesday updated its ethical guidelines around artificial intelligence, removing commitments not to apply the technology to weapons or surveillance.

The company’s AI principles previously included a section listing four "Applications we will not pursue." As recently as Jan. 30, that list included weapons, surveillance, technologies that "cause or are likely to cause overall harm," and use cases contravening principles of international law and human rights, according to a copy hosted by the Internet Archive.

In a blog post published Tuesday, Google’s head of AI, Demis Hassabis, and the company’s senior vice president for technology and society, James Manyika, said Google was updating its AI principles because the technology had become much more widespread, and because companies based in democratic countries needed to serve government and national security clients.

"There’s a global competition taking place for AI leadership within an increasingly complex geopolitical landscape. We believe democracies should lead in AI development, guided by core values like freedom, equality, and respect for human rights. And we believe that companies, governments, and organizations sharing these values should work together to create AI that protects people, promotes global growth, and supports national security," the two executives wrote.

Google’s updated AI principles page includes provisions stating that the company will use human oversight and take feedback to ensure that its technology is used in line with "widely accepted principles of international law and human rights." The principles also say the company will test its technology to "mitigate unintended or harmful outcomes."

A spokesperson for Google declined to answer specific questions about Google’s policies on weapons and surveillance.

Google first published its AI principles in 2018 after employees protested a Pentagon contract that applied Google’s computer vision algorithms to analyze drone footage. The company also opted not to renew the contract.

An open letter protesting the contract, known as Maven, was signed by thousands of employees and addressed to CEO Sundar Pichai. It stated: "We believe that Google should not be in the business of war."

Investors and executives behind Silicon Valley's rapidly expanding defense sector frequently invoke the Google employee pushback against Maven as a turning point for the industry.
